Deep-dive: Engineering a Serverless Prediction Market

Original Website (Deprecated): https://bet-jee.vercel.app/

1. Introduction: The "Meme" Economy

In the Indian engineering ecosystem, the Joint Entrance Examination (JEE) is the ultimate arbiter of fate. As the exam happens across multiple days and shifts, the difficulty varies. Students obsess over which shift was the "hardest", often betting that their shift was the toughest by human nature (since it's a percentile-based exam).

It started as a meme on r/JEENEETARDS (https://www.reddit.com/r/JEENEETards).

Post made: Reddit Thread

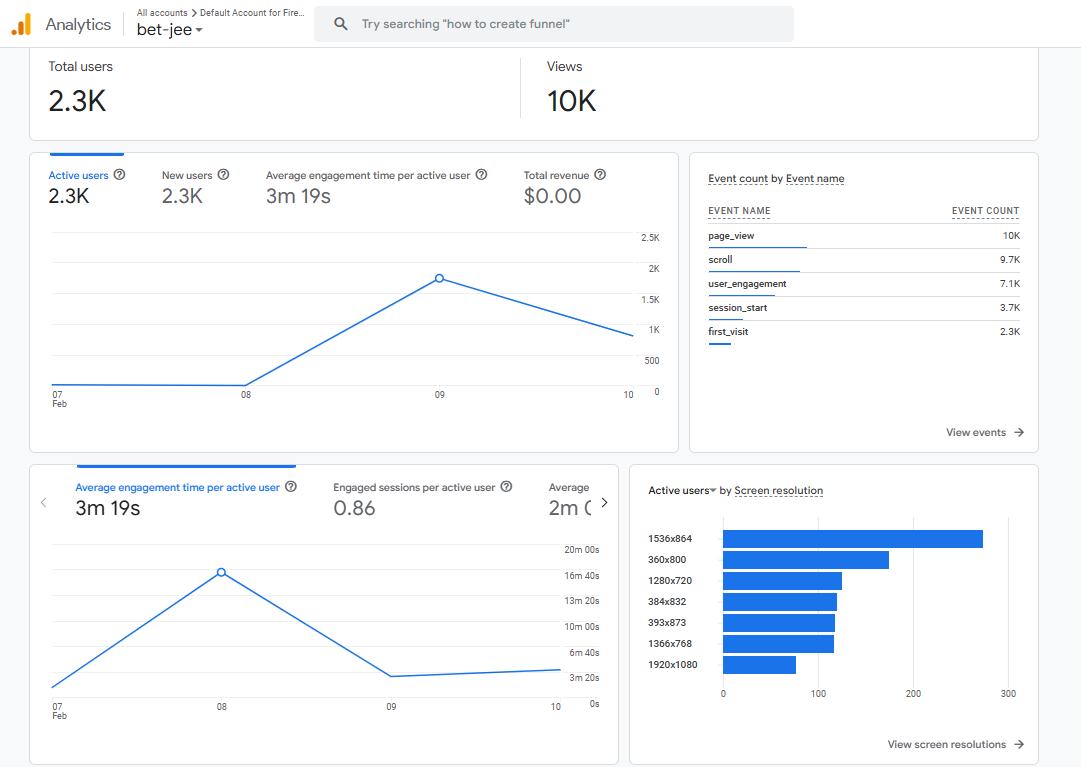

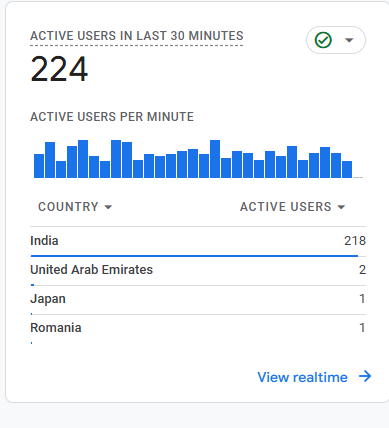

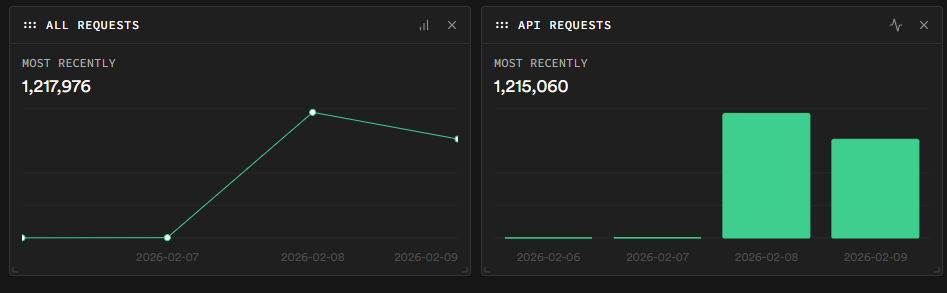

I realized this was a perfect use case for a Prediction Market—an exchange where the price of an asset (a specific shift) reflects the collective belief of the crowd. I built BetJee to test this hypothesis. It spiraled from a weekend project into a distributed system handling 1.2 million requests.

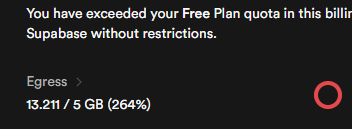

This is a deep dive into the architecture, the math behind the economy, and why I had to shut it down due to database egress limits (264% of quota) and my upcoming 12th-grade Board Exams eating up my time.

2. Database Architecture: The Ledger

Because this was a financial system (even with fake currency), data integrity was crucial. I designed a normalized PostgreSQL schema.

A. Schema Definitions

I separated user identity (`users`) from authentication secrets (`accounts`) to ensure security.

The Market State:

This table held the live state of every shift. I used an integer for `remaining_shares` to represent the liquidity pool.

Markets were fixed to be 21s1, 21s2, 22s1, 22s2, 23s1, 23s2, 24s1, 24s2, 28s1 and 28s2.

CREATE TABLE markets (

shift_id INTEGER PRIMARY KEY,

code TEXT NOT NULL, -- e.g., "27 JAN S1"

remaining_shares INTEGER NOT NULL, -- The liquidity pool

updated_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()

);

The Immutable Ledger:

Every trade was recorded here. This allowed me to reconstruct the entire market history if the state ever corrupted. I enforced an immutable record using `GENERATED ALWAYS AS IDENTITY`.

Note on Short Selling: The schema supports negative integers for shares. This enabled Short Selling—allowing users to bet against a shift being hard. If a user sold shares they didn't own, they entered a negative position (e.g., -10 shares), effectively increasing the liquidity pool and driving the price down.

CREATE TABLE transactions (

id BIGINT PRIMARY KEY GENERATED ALWAYS AS IDENTITY,

user_id TEXT REFERENCES users(id),

shift_id INTEGER REFERENCES markets(shift_id),

shares INTEGER NOT NULL, -- Positive for Buy, Negative for Sell

price NUMERIC NOT NULL, -- Execution price

type TEXT CHECK (type IN ('buy', 'sell')),

timestamp TIMESTAMP WITH TIME ZONE DEFAULT NOW()

);

The Defense Layer:

There were around 800 bot accounts created on the initial Linear-bonding curve model, manipulating the market. To fight botnets, I tracked IP addresses in a Redis-like structure within Postgres to rate-limit trades.

CREATE TABLE ip_limits (

ip_address TEXT PRIMARY KEY,

last_username TEXT,

last_trade_at TIMESTAMP WITH TIME ZONE

);B. Row Level Security (RLS) Policies

I implemented a "Service-Role Only" write architecture. While the public (`anon`) could read the market data to render charts, they could effectively never write to the database directly. All writes had to go through the Edge Functions (RPC).

Policy: Public Read Access

CREATE POLICY "public read markets" ON markets

FOR SELECT

TO anon

USING (true);Policy: Service-Only Write Access

CREATE POLICY "service only users" ON users

FOR ALL

TO service_role

USING (true)

WITH CHECK (true);3. The Pricing Engine: Solving the "Whale" Problem

The Problem:

My initial prototype used a Linear Bonding Curve (Price = k * Supply). This failed immediately. A single "whale" (a user with high capital, mostly a bot-manipulated user) bought 500 shares, pushing the price linearly to infinity and crashing the market liquidity.

The Solution:

I pivoted to a Sigmoid Bonding Curve. This acts as an Automated Market Maker (AMM).

- Low Demand: Price increases slowly.

- Mid Demand: Price accelerates (high volatility).

- High Demand: Price plateaus as it approaches the cap.

The Mathematics

To model this, I utilized the logistic function. The price P as a function of remaining supply x is defined as:

Where:

- k is the steepness (volatility).

- x₀ is the midpoint (where price action is most volatile).

- Pmin and Pmax are the hard floors and caps.

This formula ensures that as shares become scarce (x → 0), the price exponentially approaches the maximum, making it mathematically impossible to corner the entire market.

The Implementation (PL/pgSQL)

I moved the pricing logic inside the database using a Postgres RPC function (`handle_trade`) to ensure it executed atomically.

-- Calculate Price at Start

v_price_start := v_price_min + (v_price_max - v_price_min) /

(1 + EXP(-v_sigmoid_steepness * (((p_total_shares_cap - v_remaining_shares) / v_max_tradable_shares) - v_sigmoid_mid_point)));

-- Calculate Price at End (After shares are removed)

v_price_end := v_price_min + (v_price_max - v_price_min) /

(1 + EXP(-v_sigmoid_steepness * (((p_total_shares_cap - (v_remaining_shares - p_shares)) / v_max_tradable_shares) - v_sigmoid_mid_point)));

-- Execution Price = Average of Start and End

v_avg_price := (v_price_start + v_price_end) / 2.0;4. Advanced Features: Stop Loss & Recursive Execution

A major challenge was implementing Stop Losses in a serverless environment. Since I couldn't afford to run a 24/7 background worker to check prices, I used an Event-Driven Recursive Trigger.

The Logic:

Inside the `handle_trade` SQL function, after a trade executes and the price shifts, the system immediately checks the `stop_losses` table. If the new price crosses a user's trigger threshold, the function recursively calls itself to execute the stop-loss order within the same transaction block.

This ensured that stop losses were executed instantly with zero latency, purely within the database layer.

-- 8. TRIGGER STOP LOSSES (Recursion)

FOR v_sl_record IN

DELETE FROM stop_losses

WHERE shift_id = p_shift_id

AND ((shares < 0 AND trigger_price >= v_price_end) OR (shares > 0 AND trigger_price <= v_price_end))

RETURNING *

LOOP

-- Execute force trade

PERFORM handle_trade(v_sl_record.user_id, v_sl_record.shift_id, v_sl_record.shares, ... 'SYSTEM', TRUE);

END LOOP;5. Concurrency: The "Double Spend" Race Condition

The Problem:

With 200 trades per minute, Race Conditions were inevitable. If User A and User B buy the last 10 shares at the exact same millisecond, a standard `SELECT` then `UPDATE` would result in negative inventory (a double spend).

The Fix:

I enforced Pessimistic Locking using the `FOR UPDATE` clause.

-- LOCK ROWS (Concurrency Safety)

-- This line halts any other transaction trying to touch this specific market

-- until the current transaction commits or rolls back.

SELECT remaining_shares INTO v_remaining_shares

FROM markets

WHERE shift_id = p_shift_id FOR UPDATE;

-- LIQUIDITY CHECK (Atomic)

IF p_shares > 0 AND (v_remaining_shares - p_shares) < p_min_market_shares THEN

RAISE EXCEPTION 'Liquidity Limit: Cannot buy, market is dry.';

END IF;6. Security: The Bot War and "The Wipe"

As the platform trended, botnets arrived. They created thousands of accounts to farm the signup bonus and manipulate prices.

Evidence of bot manipulation:

Reddit Post 1 (Bot Manipulation)

Reddit Post 2 (Harshad Mehta Pump)

Reddit Post 3 (Market Boosting)

The Problem:

Banning an account wasn't enough; they would just create more. Furthermore, if I banned a bot that held 50% of the shares, those shares would be "frozen," destroying the market liquidity.

The Fix: "Scorched Earth" Protocol

I wrote a `wipeUserCompletely` function in Deno. It utilized heuristic analysis (e.g., >3 accounts created on one IP in <15 seconds).

Code Segment: The Deflationary Wipe

This function deletes the user and their positions but does not refund the shares to the market pool. The shares are effectively "burned." This prevents the price from crashing, preserving value for legitimate investors.

// Deno Edge Function

export async function wipeUserCompletely(supa: any, usernames: string[], ip: string) {

console.log(`[WIPE] Wiping users: ${usernames.join(", ")} on IP ${ip}`);

// 1. Delete Transactions (Erase history)

await supa.from("transactions").delete().in("user_id", usernames);

// 2. Delete Positions (The "Burn")

// We delete the ownership record, but we DO NOT increment 'remaining_shares'

// in the markets table. The supply stays constricted.

await supa.from("positions").delete().in("user_id", usernames);

// 3. Delete Authentication

await supa.from("accounts").delete().in("username", usernames);

await supa.from("users").delete().in("id", usernames);

}7. Architecture & Scalability Challenges

The "Realtime" Illusion

Supabase offers Realtime (WebSockets), but the free tier has concurrent connection limits. With 2,300+ users, I couldn't use it.

The Solution:

I implemented an optimized Polling Architecture. The client requested `delta` (changes since the last timestamp) every 3 seconds.

- Pros: Stateless, simple, worked within standard HTTP request quotas.

- Cons: Created massive egress traffic (13GB+), hitting 264% of my Supabase limit.

The Frontend Tax Bug

To reduce server load, I calculated the estimated tax (1.67%) on the client side using Vanilla JS.

The Mistake: I updated the Sigmoid `steepness` parameter on the backend SQL but forgot to push the update to the frontend `app.js`.

The Result: Users saw one price on the UI, but the trade executed at a slightly different price.

The Lesson: Never duplicate business logic. The database should be the Single Source of Truth.

8. The Community Irony

Perhaps the most educational aspect of this project wasn't the code, but the sociology.

I created a subreddit, r/betjee (link), to gather feedback and bug reports. It grew to over 30 active members quickly. However, as I focused entirely on fixing critical backend scaling issues (and studying for Physics), I was unable to moderate the community actively.

In a twist of irony, the creator of the subreddit, removed me from the moderation team. The community I built to discuss the algorithm and errors actively, is now, to my knowledge, being used for unrelated promotions.

It was a harsh lesson: Decentralized systems are great for code, but communities require centralized and trustworthy leadership.

9. Conclusion

BetJee was a crash course in high-frequency system design. I went from knowing nothing about what the stock market is to managing a trading engine with over 200 transactions a minute.

However, the reality of cloud costs and the imminent reality of my 12th-grade Board Exams forced me to shut it down.

Final reddit post: And it ends

The markets are closed. The servers are spinning down. It is time to open the textbooks. 😔